Introduction

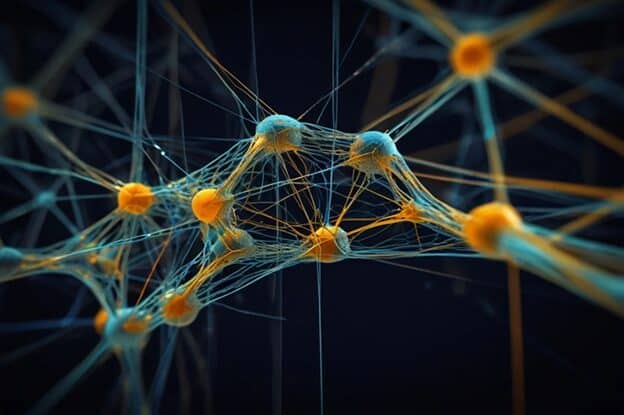

In recent years, artificial intelligence (AI) has become an integral part of our daily lives, from virtual assistants like Siri and Alexa to more complex systems like self-driving cars and sophisticated medical diagnostics. At the core of many of these advancements lies a powerful computational model known as the neural network. But what exactly is a neural network, and how does it function?

What Is a Neural Network?

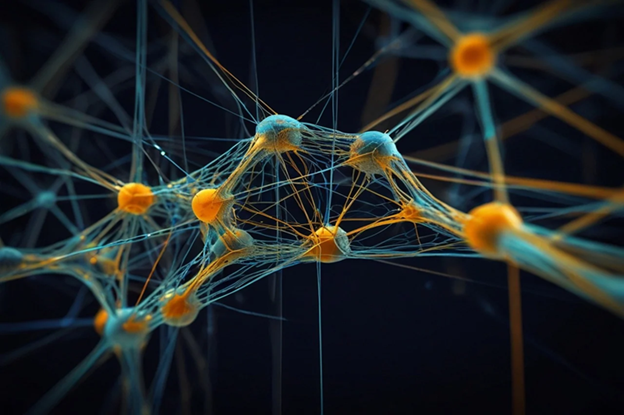

A neural network is a computational model loosely inspired by the way the human brain operates. It consists of interconnected nodes, or “neurons,” that work together to process and analyze data. Neural networks are one family of models within machine learning, the branch of artificial intelligence concerned with algorithms that allow computers to learn from data and make predictions based on it.
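To make the idea of a “neuron” concrete, here is a minimal Python sketch of a single artificial neuron. It multiplies each input by a weight, adds a bias, and passes the sum through an activation function. The sigmoid activation, the names `sigmoid` and `neuron`, and all numeric values are assumptions chosen for illustration; real networks use many activation functions and learn their weights rather than having them picked by hand.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron (hypothetical helper): weighted sum plus bias, then activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Three input features with hand-picked, hypothetical weights.
# Weighted sum: 1.0*0.4 + 0.5*(-0.2) + (-1.0)*0.1 = 0.2, and sigmoid(0.2) is about 0.5498.
print(round(neuron([1.0, 0.5, -1.0], [0.4, -0.2, 0.1], bias=0.0), 4))  # prints 0.5498
```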

Structure of a Neural Network

At its most basic level, a neural network consists of three main layers:

- Input Layer: This is the first layer of the network, where data is fed into the system. Each neuron in this layer represents a feature or attribute of the input data. For example, in an image recognition task, the pixels of the image would serve as inputs.

- Hidden Layer(s): Between the input and output layers, there can be one or more hidden layers. These layers perform computations and transformations on the input data. Each neuron in these layers receives inputs from the previous layer, computes a weighted sum of them (usually plus a bias term), applies an activation function to that sum, and passes the result to the next layer. The number of hidden layers and neurons varies with the complexity of the task, and deeper networks are often better at capturing intricate patterns.

- Output Layer: The final layer of the neural network, where the model produces its predictions or classifications. The number of neurons in this layer depends on the task. A classifier that chooses among several classes typically has one output neuron per class. Binary classification is the common exception: a single output neuron usually suffices, because its value (for example, a probability between 0 and 1) indicates which of the two classes is more likely.
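The three layers can be sketched as a forward pass through a tiny fully connected network. This network has two input features, one hidden layer of three neurons, and a single output neuron for binary classification. It is an illustrative plain-Python sketch, not a production implementation. The ReLU hidden activation, the sigmoid output, the layer sizes, the helper names, and every parameter value are assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """Fully connected layer: weights[j] holds neuron j's weight for every input."""
    return [
        activation(sum(x * w for x, w in zip(inputs, w_row)) + b)
        for w_row, b in zip(weights, biases)
    ]

def forward(features, params):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(features, params["W1"], params["b1"], relu)
    output = dense(hidden, params["W2"], params["b2"], sigmoid)
    return output[0]  # single output neuron: probability of the positive class

# Hypothetical parameters: 2 input features, 3 hidden neurons, 1 output neuron.
params = {
    "W1": [[0.5, -0.3], [0.8, 0.2], [-0.6, 0.9]],
    "b1": [0.0, 0.1, -0.1],
    "W2": [[1.0, -0.5, 0.7]],
    "b2": [0.0],
}

p = forward([1.0, 2.0], params)
print(f"P(positive class) = {p:.3f}")  # prints P(positive class) = 0.530
print("positive" if p > 0.5 else "negative")
```

Storing the weights as one row per neuron keeps each layer a simple loop over neurons. Libraries such as NumPy or PyTorch express the same computation as a matrix-vector product, which is far faster for networks of realistic size.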

How Neural Networks Work

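The process of training a neural network involves adjusting the weights of the connections between neurons so that the network’s outputs move closer to the correct targets for the training data. This is typically done with backpropagation, an algorithm that computes how much each weight contributes to the network’s error. Backpropagation is combined with an optimization method such as gradient descent, which uses that information to update the weights. In the common supervised setting, where each training example comes with a known target, it works like this: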

- Forward Pass: The input data is fed into the network and flows forward layer by layer. Each neuron applies its weights and activation function, and the output layer produces the network’s prediction.

- Loss Calculation: The output of the network is compared to the actual target value (ground truth), and a loss function is used to quantify the difference between the predicted output and the target.

- Backward Pass: The network then calculates the gradient of the loss function with respect to each weight, which indicates how the loss would change if that weight were nudged slightly. These gradients are computed layer by layer using the chain rule of calculus, starting at the output and moving backward toward the input. Each weight is then adjusted a small step in the direction that reduces the loss.

- Iteration: The forward pass, loss calculation, and backward pass are repeated many times. Each complete pass over the training dataset is called an epoch, and training typically runs for many epochs until the network learns to make accurate predictions.
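The four steps can be combined into a short training loop. The sketch below trains a network with one hidden layer on the logical AND function. It uses backpropagation to compute the gradients and plain gradient descent to update the weights. Each weight w moves a small step against its gradient, w ← w − η · ∂L/∂w, where η is the learning rate.

Several choices are assumptions made to keep the example small, not details from the text above: sigmoid activations, binary cross-entropy loss, one update per training example, the AND dataset, and the hyperparameter values. The helper names (`train`, `bce_loss`) are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce_loss(p, y, eps=1e-12):
    """Binary cross-entropy between predicted probability p and label y (0 or 1)."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def train(data, n_hidden=3, lr=0.5, epochs=3000, seed=0):
    """Train a 1-hidden-layer network with per-example gradient descent (hypothetical helper)."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]  # hidden weights
    b1 = [0.0] * n_hidden                                                       # hidden biases
    v = [rng.uniform(-1, 1) for _ in range(n_hidden)]                           # output weights
    c = 0.0                                                                     # output bias

    def forward(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
        p = sigmoid(sum(vj * hj for vj, hj in zip(v, h)) + c)
        return h, p

    history = []  # mean loss per epoch
    for _ in range(epochs):              # one epoch = one full pass over the data
        total = 0.0
        for x, y in data:                # one iteration = one training example here
            h, p = forward(x)            # 1. forward pass
            total += bce_loss(p, y)      # 2. loss calculation
            dz2 = p - y                  # 3. backward pass: dL/dz at the output neuron
            # Propagate the error to the hidden layer (chain rule), using v before it changes.
            dz1 = [dz2 * v[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            for j in range(n_hidden):    # gradient descent: step against each gradient
                v[j] -= lr * dz2 * h[j]
                b1[j] -= lr * dz1[j]
                for i in range(n_in):
                    W1[j][i] -= lr * dz1[j] * x[i]
            c -= lr * dz2
        history.append(total / len(data))  # 4. iteration: repeat for many epochs
    return (lambda x: forward(x)[1]), history

AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
predict, history = train(AND)
print(f"mean loss: first epoch {history[0]:.3f}, last epoch {history[-1]:.3f}")
for x, y in AND:
    print(x, "target", y, "predicted", round(predict(x), 3))
```

Because the output neuron uses a sigmoid and the loss is binary cross-entropy, the gradient at the output simplifies to `p - y`. The hidden-layer gradients then follow from the chain rule: that value is multiplied by each outgoing weight and by the sigmoid’s derivative, `h * (1 - h)`.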

Applications of Neural Networks

- Image Recognition: Used in applications like facial recognition and autonomous vehicles to identify objects within images.

- Natural Language Processing (NLP): Powers applications such as language translation, sentiment analysis, and chatbots by processing and understanding human language.

- Speech Recognition: Transforms spoken language into text, enabling voice-activated systems and virtual assistants.

- Healthcare: Assists in diagnosing diseases by analyzing medical images or predicting patient outcomes based on historical data.

- Finance: Employed for fraud detection, algorithmic trading, and credit scoring by analyzing transaction patterns and market trends.

The Future of Neural Networks

As technology continues to advance, so too does the potential for neural networks. Architectures specialized for particular kinds of data, such as convolutional neural networks (CNNs) for image processing and recurrent neural networks (RNNs) for sequence data, are already well established, and research continues into more efficient designs. More recently, large language models (LLMs) have shown how capable neural networks can be at generating coherent and contextually relevant text.

However, challenges remain. Neural networks often need vast amounts of data and computing power, it is difficult to interpret how a trained network arrives at its decisions, and models can reproduce biases present in their training data. As we continue to explore and refine neural network technology, we edge closer to unlocking even greater capabilities in artificial intelligence.

Conclusion

Neural networks have revolutionized the way we approach problem-solving in many fields, drawing loose inspiration from the human brain’s ability to learn and adapt. As research and technology progress, neural networks are likely to play an increasingly vital role in shaping the future of AI, bringing us closer to machines that can think and learn like humans. Understanding their structure, functionality, and applications is essential for anyone looking to grasp the complexities of modern artificial intelligence.
